Modern 2: Operators, Observables, and Measurement


Operators, Observables, and Measurement

physical and abstract vectors

We're going to build up to the definition of an operator, but

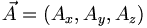

let's start with the idea of physical vectors. These are objects that have a direction and length (or magnitude). The force on an object for example. In a particular coordinate system we can represent this vector as a 3-tuple. E.g.,  . So the 3-tuple is the representation of the vector in a particular coordinate system. In other coordinates there will be other tuples, but the vector itself has an existance independent of the coordinates used.

. So the 3-tuple is the representation of the vector in a particular coordinate system. In other coordinates there will be other tuples, but the vector itself has an existance independent of the coordinates used.
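As a rough illustration of the distinction between a vector and its representation, the sketch below writes the same vector in two coordinate systems that differ by a rotation about the z axis. The numbers and the helper names `rotate_coordinates` and `length` are made up for this example; the point is only that the tuple changes while the length does not.

```python
import math

def rotate_coordinates(v, angle):
    """Components of the same 3-D vector in axes rotated by `angle` about z."""
    x, y, z = v
    c, s = math.cos(angle), math.sin(angle)
    # Passive rotation: the axes turn, the vector itself stays put.
    return (c * x + s * y, -s * x + c * y, z)

def length(v):
    """Euclidean length, which should not depend on the coordinates used."""
    return math.sqrt(sum(comp * comp for comp in v))

force = (3.0, 4.0, 0.0)  # hypothetical force components in the first system
force_rotated = rotate_coordinates(force, math.radians(30))

print("original tuple:", force, "length:", length(force))
print("rotated tuple: ", force_rotated, "length:", length(force_rotated))
```

The two printed tuples differ, but both lengths agree up to rounding.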

So the way to think of a vector equation such as $\vec{y} = A\vec{x}$ is that the object A maps one vector into another. If the two vectors have the same number of components, then A can be represented by a square matrix. In the same way, we can think of functions as vectors too. Just as we add vectors component-wise:

![[\vec{x} + \vec{y}] _ i = x_i + y_i](/csm/wiki/images/math/6/b/5/6b5b0ae8acc4837872df10a4359b1553.png)

we add functions point-wise. If f and g are functions of one variable, then

$$[f + g](x) = f(x) + g(x)$$

The main difference between these two equations is that the functions, in effect, have an infinite number of components, one for each value of the real variable x!
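The parallel between the two kinds of addition can be made concrete with a short sketch. The function names `add_vectors` and `add_functions` are chosen for this example only; the first sums a finite list of components, the second returns a new function defined at every point.

```python
import math

def add_vectors(x, y):
    """Component-wise sum: [x + y]_i = x_i + y_i."""
    return [xi + yi for xi, yi in zip(x, y)]

def add_functions(f, g):
    """Point-wise sum: [f + g](x) = f(x) + g(x). Returns a new function."""
    return lambda x: f(x) + g(x)

v = add_vectors([1.0, 2.0, 3.0], [10.0, 20.0, 30.0])
h = add_functions(math.sin, math.cos)

print(v)        # three components, one per index i
print(h(0.0))   # one "component" of f + g, the one labelled by x = 0
```

Here `h` can be evaluated at any real x, which is the sense in which a function has one component per point.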

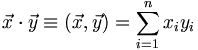

Continuing this analogy, if we have two n-dimensional vectors $\vec{x}$ and $\vec{y}$, we take the dot product or inner product by summing the products of the individual components:

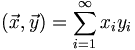

We will use this bracket notation all the time, so please focus on it. Now we can at least consider the possibility of having vectors with an infinite number of components. The main difficulty we would face is that whereas in a finite dimensional space any two vectors have a dot product, in an infinite dimensional space we have to consider whether or not the following infinite series actually converges:

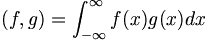

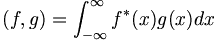
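To get a feel for the convergence question, one can watch the partial sums of the dot product grow with the number of terms. The two sequences below are examples picked for this sketch, not taken from the course text: components $1/i$ give a sum that settles down, while components $1/\sqrt{i}$ give a sum that keeps growing.

```python
import math

def partial_dot(x, y, n_terms):
    """Sum of x(i) * y(i) for i = 1 .. n_terms; components are functions of i."""
    return sum(x(i) * y(i) for i in range(1, n_terms + 1))

def fast(i):
    return 1.0 / i            # components 1, 1/2, 1/3, ...

def slow(i):
    return 1.0 / math.sqrt(i) # components 1, 1/sqrt(2), 1/sqrt(3), ...

limit = math.pi ** 2 / 6      # known value of the sum of 1/i^2

for n in (10, 1000, 100000):
    print(n, partial_dot(fast, fast, n), partial_dot(slow, slow, n))
```

The first column of sums approaches `limit`; the second is the harmonic series and grows roughly like the logarithm of the number of terms, so that "vector" has no finite dot product with itself.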

So, how do you suppose we should define the dot product of two continuous functions? Assume we have two real functions defined on the entire real line. Then, assuming the integral converges, we would say:

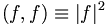

If the functions are complex-valued, then we need to put a complex conjugate in the definition of the inner product in order that $(f,f)$ be real:

$$(f,g) = \int_{-\infty}^{\infty} f^*(x)\, g(x)\, dx$$
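A numerical sketch shows why the conjugate matters. It approximates the integral by a midpoint sum on a finite interval, with the conjugate placed on the first function. The test function `f`, the interval, and the number of sample points are all assumptions made for this example.

```python
import cmath
import math

def inner(f, g, a=-10.0, b=10.0, n=4000):
    """Midpoint-rule estimate of the integral of conj(f(x)) * g(x) over [a, b]."""
    h = (b - a) / n
    total = 0j
    for k in range(n):
        x = a + (k + 0.5) * h
        total += f(x).conjugate() * g(x)
    return total * h

def f(x):
    """Hypothetical test function: a shifted Gaussian times a complex phase."""
    return cmath.exp(1j * x) * math.exp(-(x - 1.0) ** 2 / 2)

with_conjugate = inner(f, f)
# Conjugating f before passing it in cancels the conjugate inside `inner`,
# so this is the integral of f(x) * f(x) with no conjugate at all.
without_conjugate = inner(lambda x: f(x).conjugate(), f)

print("with conjugate:   ", with_conjugate)
print("without conjugate:", without_conjugate)
```

With the conjugate the result is real and positive (close to $\sqrt{\pi}$ for this choice of `f`); without it the result has a sizeable imaginary part and could not serve as a squared length.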

There is a subtle difference between vectors that have an infinite number of components which we can label by integers (countably infinite) and those where the number of components is continuously infinite, such as f(x).

MathWorld has entries on countably infinite and uncountably infinite sets; see also its definition of the continuum.

These more general sets of vectors all satisfy the formal mathematical definition of vector spaces.

A linear vector space over a set F of scalars is a set of elements V together with a function called addition from $V \times V$ into V and a function called scalar multiplication from $F \times V$ into V satisfying the following conditions for all $x, y, z \in V$ and all $\alpha, \beta \in F$:

- [V1:] (x + y) + z = x + (y + z)

- [V2:] x + y = y + x

- [V3:] There is an element 0 in V such that x + 0 = x for all $x \in V$.

- [V4:] For each $x \in V$ there is an element $-x \in V$ such that x + ( − x) = 0.

- [V5:] α(x + y) = αx + αy

- [V6:] (α + β)x = αx + βx

- [V7:] α(βx) = (αβ)x

- [V8:] 1x = x, where 1 is the multiplicative identity in F

operators

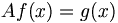

An operator maps a vector into a vector. Henceforth we will use the general definition of a vector. Wavefunctions are examples of vectors. So an operator equation would be of the form

$$A f = g,$$

where f and g are vectors and A is the operator.

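We will only deal with linear operators in this course. This means that any such operator A satisfies, for all vectors f and g and all scalars $\alpha$ and $\beta$:

$$A(\alpha f + \beta g) = \alpha A f + \beta A g$$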
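Linearity can be spot-checked numerically by applying an operator to a combination $\alpha f + \beta g$ and comparing with the same combination of the separate results. In the sketch below, the derivative (approximated by a central difference) passes the check and the squaring map fails it. Both operators, the helper names, and the test values are choices made for this illustration.

```python
import math

def derivative(f, h=1e-5):
    """Operator D: maps f to a central-difference estimate of its derivative."""
    return lambda x: (f(x + h) - f(x - h)) / (2 * h)

def square(f):
    """Operator S: maps f to f squared. This one is not linear."""
    return lambda x: f(x) ** 2

def combine(a, f, b, g):
    """The vector a*f + b*g, built point-wise."""
    return lambda x: a * f(x) + b * g(x)

def linearity_defect(op, a, f, b, g, x):
    """|op(a f + b g) - (a op(f) + b op(g))| evaluated at the point x."""
    lhs = op(combine(a, f, b, g))(x)
    rhs = a * op(f)(x) + b * op(g)(x)
    return abs(lhs - rhs)

defect_derivative = linearity_defect(derivative, 2.0, math.sin, -3.0, math.exp, 0.4)
defect_square = linearity_defect(square, 2.0, math.sin, -3.0, math.exp, 0.4)

print("derivative:", defect_derivative)  # rounding-level
print("square:    ", defect_square)      # clearly nonzero
```

A check at one point with one pair of functions cannot prove linearity, but a single failure, as for `square`, is enough to rule it out.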

Hermitian operators or matrices

The transpose of a matrix A is denoted by $A^T$ and is defined as follows: the i,j component of $A^T$ is equal to the j,i component of A, that is, $(A^T)_{ij} = A_{ji}$.

The Hermitian conjugate or adjoint of a matrix is the complex conjugate of the transpose. This is denoted $A^H$ (H for Hermitian conjugate). When referring to operators, the adjoint is sometimes denoted by a dagger instead of an H: $A^\dagger$. For our purposes we will treat these notations as interchangeable. A matrix that is equal to its Hermitian conjugate is said to be a Hermitian matrix, or self-adjoint.
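These definitions translate directly into a few lines of code. The sketch below stores a matrix as a list of rows; the helper names and the two example matrices (one of them the Pauli matrix usually written $\sigma_y$) are picked for illustration.

```python
def transpose(A):
    """The i,j entry of the result is the j,i entry of A."""
    return [list(row) for row in zip(*A)]

def adjoint(A):
    """Hermitian conjugate: complex conjugate of the transpose."""
    return [[complex(entry).conjugate() for entry in row] for row in transpose(A)]

def is_hermitian(A):
    """True when A equals its own Hermitian conjugate."""
    return adjoint(A) == [[complex(entry) for entry in row] for row in A]

sigma_y = [[0, -1j],
           [1j, 0]]   # equal to its adjoint
B = [[1, 2j],
     [3, 4]]          # not equal to its adjoint

print(adjoint(B))
print(is_hermitian(sigma_y), is_hermitian(B))
```

Note that `sigma_y` is not symmetric under the plain transpose; it is the extra complex conjugation that makes it self-adjoint.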

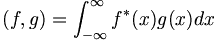

Now, referring back to our definition of the inner product of two complex functions, written here as an integral over three-dimensional space:

$$(f,g) = \int f^* g \ d^3 r$$

The book uses a hat on top of an upper-case roman letter to refer to operators, so let $\hat{A}$ be an operator. Then if the operator is self-adjoint, or Hermitian,

![(\hat{A}f,g) = \int \left[ \hat{A} f\right] ^* g \ d^3 r = \int f^* \left[\hat{A} g\right] d^3r = (f,\hat{A} g)](/csm/wiki/images/math/3/2/6/32627fac05f1185fb20b1aacbd29476a.png) for any pair of functions $f$ and $g$.
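The self-adjoint condition can be tested numerically in one dimension. The sketch below is an illustration under several assumptions: it uses the operator $-i\, d/dx$ as the example, approximates the derivative by central differences on a uniform grid, and picks two test functions that are negligibly small at the ends of the grid. The plain derivative $d/dx$ is included as a contrast, since it fails the test.

```python
import cmath
import math

N = 400                       # interior grid points (hypothetical choice)
a, b = -8.0, 8.0
h = (b - a) / (N + 1)
xs = [a + (j + 1) * h for j in range(N)]

def sample(func):
    """Values of func on the grid, stored as complex numbers."""
    return [complex(func(x)) for x in xs]

def inner(u, v):
    """Discrete version of (u, v): sum of conj(u) * v times the grid spacing."""
    return sum(ui.conjugate() * vi for ui, vi in zip(u, v)) * h

def ddx(u):
    """d/dx by central differences, taking u = 0 just outside the grid."""
    padded = [0j] + u + [0j]
    return [(padded[j + 2] - padded[j]) / (2 * h) for j in range(N)]

def momentum(u):
    """Example operator A = -i d/dx."""
    return [-1j * du for du in ddx(u)]

f = sample(lambda x: cmath.exp(1j * x) * math.exp(-x * x))
g = sample(lambda x: (x + 0.5j) * math.exp(-(x - 0.5) ** 2))

lhs = inner(momentum(f), g)   # (A f, g)
rhs = inner(f, momentum(g))   # (f, A g)
print("(Af, g) =", lhs)
print("(f, Ag) =", rhs)
print("d/dx mismatch:", abs(inner(ddx(f), g) - inner(f, ddx(g))))
```

For $-i\, d/dx$ the two inner products agree to rounding error, while for $d/dx$ alone they differ by a sign, so the mismatch is far from zero. The factor of $-i$ is what turns the derivative into a Hermitian operator on functions that vanish at the boundaries.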